The Rise of Warm Water Cooling in Data Centres

Four months into my new role at Red Oak Consulting, I have had the opportunity to research liquid cooling further. While one of my favourite activities is liquid cooling of the IPA (India Pale Ale) kind, a Red Oak blog about beer apparently wasn’t OK! So instead, I decided to write a few words about my previous experience with both direct liquid and immersion cooling, and about what co-lo and datacentre companies offer in the liquid cooling space.

With the latest innovations in CPU and GPU technology come increasing power requirements and, with them, the challenge of cooling IT infrastructure. At the time of writing, “top bin” CPUs from all silicon vendors are rated at between 280 and 400 W per socket. Add two of them to memory and disks and you can easily reach 1 kW in a 1U “pizza box” style server. That is before you start adding accelerators such as GPUs and FPGAs, some of which are forthcoming designs in the OAM (OCP Accelerator Module) form factor mentioned below.
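
To make the 1 kW figure a little more concrete, here is a rough power budget for a dual-socket 1U server. Only the CPU TDP range comes from the figures above; the DIMM, disk and “other” numbers are hypothetical assumptions chosen for illustration, not measurements or vendor specifications.

```python
# Rough power budget for a dual-socket 1U server.
# Only the CPU TDP range (280-400 W) comes from the text; every other
# figure below is a hypothetical assumption for illustration.
SOCKETS = 2
DIMMS = 16             # assumed DIMM count
WATTS_PER_DIMM = 10    # assumed per-DIMM draw
DISKS = 4              # assumed drive count
WATTS_PER_DISK = 15    # assumed per-drive draw
OTHER_W = 100          # assumed fans, NIC, board and PSU losses combined


def server_power_w(cpu_tdp_w: int = 400) -> int:
    """Sum the assumed component draws for one 1U server, in watts."""
    return (cpu_tdp_w * SOCKETS
            + DIMMS * WATTS_PER_DIMM
            + DISKS * WATTS_PER_DISK
            + OTHER_W)


if __name__ == "__main__":
    for tdp in (280, 400):
        total = server_power_w(tdp)
        print(f"{tdp} W CPUs: ~{total} W ({total / 1000:.2f} kW) per server")
```

Even at the bottom of the CPU range the assumed server sits close to 1 kW, and the top-bin parts push it over.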

Fully populating a contemporary 42U datacentre rack with standard 19” servers like this can mean up to 40 kW per rack. To supply that 40 kW you are already looking at two 3-phase 32 A Power Distribution Units (PDUs), which need to fit within a standard-sized rack alongside the servers. The OCP (Open Compute Project) form factor is wider, at 21”, and can potentially squeeze three nodes into a single U of rack height.
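
As a back-of-the-envelope check on the PDU count, the sketch below estimates the nominal capacity of a 3-phase PDU as phases × phase voltage × current per phase. The 230 V phase-to-neutral voltage is an assumption (typical of UK/EU supplies), and the sketch deliberately ignores derating and A/B feed redundancy, both of which a real rack design would need to account for.

```python
import math

# Assumed phase-to-neutral supply voltage (typical UK/EU); not from the text.
PHASE_VOLTAGE_V = 230


def pdu_capacity_w(amps_per_phase: float, phases: int = 3,
                   phase_voltage_v: float = PHASE_VOLTAGE_V) -> float:
    """Nominal capacity of one PDU: phases x phase voltage x current per phase.

    Ignores derating and redundancy, so this is an upper bound.
    """
    return phases * phase_voltage_v * amps_per_phase


def pdus_needed(rack_load_w: float, amps_per_phase: float) -> int:
    """Smallest whole number of PDUs whose combined capacity covers the load."""
    return math.ceil(rack_load_w / pdu_capacity_w(amps_per_phase))


if __name__ == "__main__":
    per_pdu = pdu_capacity_w(32)
    print(f"One 3-phase 32 A PDU: ~{per_pdu / 1000:.1f} kW")
    print(f"PDUs for a 40 kW rack: {pdus_needed(40_000, 32)}")
```

At roughly 22 kW per PDU, a single unit falls well short of 40 kW, which is why two are needed before any redundancy is considered.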

Furthermore, the Thermal Design Power (TDP) of chips only seems to be heading north, with OAM accelerators projected to reach 1,000 W TDP within the next few years.

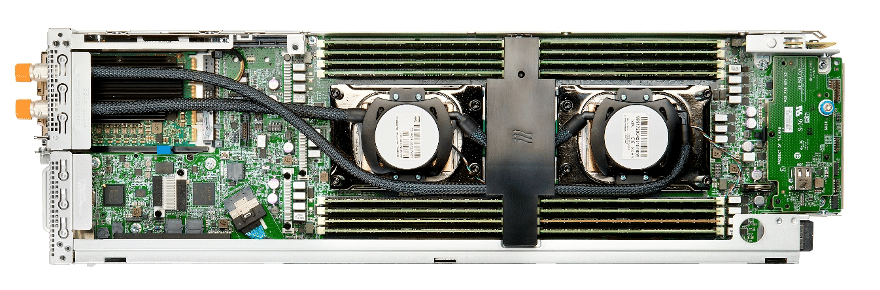

To explore higher-density installations in datacentres, a Direct Liquid Cooled (DLC) proof of concept (PoC) that I ran in my last job used 400 W CPUs, eight per 2U server, in a 46U rack. Each 2U server consumed 3.2 kW, amounting to nearly 80 kW in one rack. The PoC used three 63 A 3-phase PDUs plus liquid distribution manifolds.
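
A quick sanity check on that rack figure is sketched below. It assumes that all 46U are given over to 2U servers and counts only CPU power, that is, the 8 × 400 W per server that makes up the 3.2 kW quoted. The first assumption is plausible here because the switches were mounted vertically.

```python
# PoC figures from the text; filling every U with servers is an assumption.
RACK_HEIGHT_U = 46
SERVER_HEIGHT_U = 2
CPUS_PER_SERVER = 8
CPU_TDP_W = 400


def rack_power_kw(rack_u: int = RACK_HEIGHT_U,
                  server_u: int = SERVER_HEIGHT_U,
                  cpus_per_server: int = CPUS_PER_SERVER,
                  cpu_w: int = CPU_TDP_W) -> float:
    """CPU-only power for a rack filled with identical servers, in kW."""
    servers = rack_u // server_u  # assumes every U is used for servers
    return servers * cpus_per_server * cpu_w / 1000


if __name__ == "__main__":
    print(f"{RACK_HEIGHT_U // SERVER_HEIGHT_U} servers, {rack_power_kw():.1f} kW")
```

That works out to roughly 74 kW, in the same region as the “nearly 80 kW” above. Anything else drawing power in the rack would push the total higher.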

The system was housed in an 800 mm wide by 1,400 mm deep cabinet to shoehorn all that additional infrastructure into the rack. Switches were mounted vertically, making use of the extra width of the 800 mm cabinet.

The rack was cooled with warm water, which does away with the need for chillers and relies instead on facility water delivered at (or slightly above) the ambient temperature outside the building.
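
To give a feel for the plumbing involved, the heat a water loop carries away follows Q = ṁ·c_p·ΔT. The sketch below rearranges that relation to estimate the flow rate needed for a given heat load. For illustration only, it assumes that all of the rack’s heat goes into the water (in practice some is still rejected to air), a 10 K rise between inlet and outlet, and approximate properties of water near 40 °C.

```python
# Approximate properties of water near 40 °C (illustrative values).
WATER_CP_J_PER_KG_K = 4186.0    # specific heat capacity
WATER_DENSITY_KG_PER_L = 0.992  # density


def required_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow needed to carry heat_load_w with a delta_t_k inlet-to-outlet rise.

    Rearranges Q = m_dot * c_p * dT for m_dot (kg/s), then converts to L/min.
    """
    mass_flow_kg_s = heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Assumes 100% heat capture by the liquid and a 10 K temperature rise.
    for load_kw in (40, 80):
        flow = required_flow_l_per_min(load_kw * 1000, delta_t_k=10.0)
        print(f"{load_kw} kW rack, 10 K rise: ~{flow:.0f} L/min")
```

Halving the allowed temperature rise doubles the flow, which is one reason designers push for higher return temperatures.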

Warm water cooling for DLC is increasingly common in tender requirements, which specify support for inlet temperatures of up to 40 °C.
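
The appeal of a 40 °C inlet is that the facility’s heat-rejection plant (for example, dry coolers) can supply it for much of the year without chillers. A simplified check is whether the outdoor temperature plus the plant’s approach temperature stays at or below the inlet limit. The 5 K approach and the outdoor temperatures below are hypothetical values, and lumping the dry cooler and any intermediate heat exchanger into a single approach figure is a simplification.

```python
# Upper inlet temperature quoted in tender requirements.
MAX_INLET_C = 40.0


def chiller_free(outdoor_c: float, approach_k: float = 5.0) -> bool:
    """True if facility water (outdoor temp + approach) is within the inlet limit.

    approach_k is a hypothetical figure lumping together the dry cooler and any
    heat exchanger between the facility loop and the rack.
    """
    return outdoor_c + approach_k <= MAX_INLET_C


if __name__ == "__main__":
    # Hypothetical outdoor temperatures in °C, not site data.
    for outdoor in (5.0, 18.0, 28.0, 36.0):
        status = "no chiller needed" if chiller_free(outdoor) else "needs trim cooling"
        print(f"{outdoor:5.1f} °C outdoors -> {status}")
```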

For HPC installations, liquid cooling is an obvious solution to the problem, and not even a particularly new one. Cray used liquid cooling back in 1976 for its first supercomputer, the Cray-1. It used a Freon cooling system housed in a base that also doubled as a handy bench you could sit on. If you’re ever in London, there is a Cray-1 you can go and see in the Science Museum.

DLC has been in production for commodity “white box” and OEM server designs for several years now and is increasingly the preferred choice for higher-density HPC installations. Immersion cooling is gathering momentum, but I am yet to be convinced that it can effectively cool the high thermal density parts mentioned above.

Deploying warm water liquid-cooled racks in datacentres improves efficiency and Power Usage Effectiveness (PUE), but it does come with challenges.
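
For reference, PUE is total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean zero cooling and power-distribution overhead. The sketch below compares two entirely hypothetical overhead figures for an 80 kW IT load, one standing in for chiller-based air cooling and one for warm water cooling. They illustrate the calculation only and are not measured results.

```python
def pue(it_kw: float, overhead_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + overhead_kw) / it_kw


if __name__ == "__main__":
    it_load_kw = 80.0
    # Hypothetical overheads (cooling plus distribution losses), not measurements.
    scenarios = {"chilled air": 40.0, "warm water DLC": 8.0}
    for name, overhead in scenarios.items():
        print(f"{name}: PUE = {pue(it_load_kw, overhead):.2f}")
```

Removing chillers attacks the largest slice of that overhead, which is where warm water cooling earns its PUE improvement.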

I recall a datacentre customer commenting some years back, “Not in my datacentre; liquid and electricity don’t go well together!” Designing and integrating liquid cooling systems requires careful planning, as it involves additional infrastructure such as coolant distribution units, pumps and heat exchangers, all of which are potential sources of leaks.

So, the question I have been wrestling with is this: if you are not going to host your HPC system in your own datacentre, which would mean designing your own cooling infrastructure, what high-density compute cooling can you get “off the peg” from the larger co-lo datacentre providers?

The large monolithic DLC solutions (e.g., HPE Cray EX, Bull Sequana) have their own integrated cooling distribution designs. They routinely deploy 80 kW per rack and confidently predict that they will be doing something north of 200 kW in the not-too-distant future.

From a bit of market research, the most that co-lo vendors seem to offer is 40 kW per rack with forced air cooling, which is already the sweet-spot power envelope for today’s HPC systems. If you are looking for a higher-density, higher-power DLC solution, the cooling infrastructure required is still bespoke rather than something you can simply “plug” into.
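
To put that gap in numbers, the sketch below counts how many racks at a co-lo’s 40 kW ceiling it would take to absorb a given load. It assumes, purely for illustration, that the system could be split across racks and air cooled at that density at all, which is not a given for high-TDP parts.

```python
import math

# Typical co-lo ceiling from the market research described in the text.
COLO_MAX_KW_PER_RACK = 40.0


def colo_racks_needed(system_kw: float,
                      max_kw_per_rack: float = COLO_MAX_KW_PER_RACK) -> int:
    """Smallest number of co-lo racks whose combined power budget covers system_kw."""
    return math.ceil(system_kw / max_kw_per_rack)


if __name__ == "__main__":
    # 80 kW and 200 kW are the per-rack DLC figures quoted for monolithic systems.
    for load_kw in (40.0, 80.0, 200.0):
        print(f"{load_kw:.0f} kW -> {colo_racks_needed(load_kw)} rack(s) at 40 kW each")
```

Every extra rack is extra floor space, cabling and, under per-kW pricing, a less dense footprint for the same compute.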

The overheads of building, managing, and maintaining your own datacentre are significant. Add to this the capability to plumb servers into DLC infrastructure, and it often becomes an engineering challenge that HPC operators don’t want to undertake. Co-lo providers usually charge per kW for power, space, and cooling. Is it time, then, for them to add DLC to their product offerings?

Ian Lloyd

Senior Principal Consultant – Red Oak Consulting