Introduction

It’s mid-2013, and I have just graduated with a physics degree. I really enjoyed the theoretical component, but the computational and experimental labs were rather uninspiring. I still want to do something more practical, with foreseeable benefits, but I am also afraid of specialising myself into oblivion.

A few months of speculative emails and applications for PhD places later, I accepted a project in Materials Characterisation, with both computational and experimental aspects.

Most importantly for this article, the computational aspect involved High-Performance Computing (HPC). The years that followed placed me in an excellent position to understand how HPC and lab experiments go hand in hand.

What is Materials Characterisation?

Materials Characterisation is the process of understanding the chemical and physical properties of materials. Over the last century, it has been crucial to the rapid advances in computing technology, and therefore in HPC.

The Eye-Watering Costs of Experimental Materials Characterisation

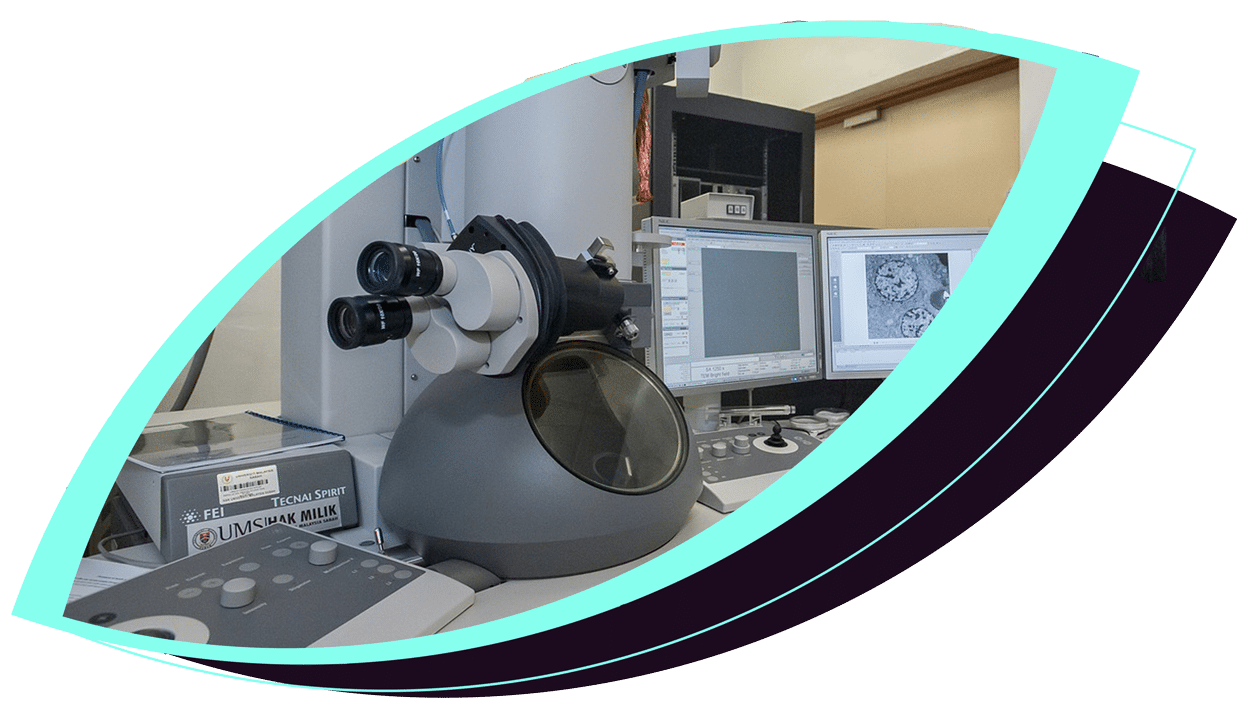

Modern Materials Characterisation involves lab experiments and computational calculations. In my case, the lab component involved a rather expensive piece of equipment known as a Transmission Electron Microscope (TEM). ‘Microscope’ may conjure up an image of me sitting at a desk and looking through a glass lens to get a ‘better’ look at something, and this isn’t entirely inaccurate.

However, a modern TEM requires an entire dedicated room, and in some cases the user operates it from an adjacent room. In an optical microscope, we use light to closely examine an object.

In TEM, we instead use electrons to examine a material. A TEM can achieve resolution well below a nanometre, fine enough that individual atoms can be observed.

New TEMs cost anywhere from hundreds of thousands to millions of dollars, with the atomic-resolution kind I was using towards the upper end of that range. A TEM also cannot simply be switched off: it requires constant power even when not in use.

Sample preparation is a whole other consideration: it can cost hundreds of dollars per sample and take several hours.

Add to that how easily samples break (they must be extremely thin for atoms to be seen) and the need for several samples over the course of a project, and I’m sure I don’t need to say more about the eye-watering costs involved.

The power of TEMs also makes them heavily sought after, and, particularly in academia, they often need to be booked well in advance. Experimental apparatus such as TEMs are extremely complex systems with many delicate components.

They inevitably malfunction or break down from time to time and need to be repaired, which in some cases can take months. I lost around a year of my PhD, and a good six months of my postdoc, to such breakdowns.

Hopefully, you are beginning to see that simply running numerous experiments is not only expensive: unless you can afford a TEM of your own, it also involves a lot of waiting for access, and that access will be time-limited.

So, what can we do to reduce costs and fill in that time spent waiting in a useful manner? Well, one answer is HPC calculations.

A Theoretical Approach to HPC in Materials Characterisation

The theory behind atomic-scale interactions has been established for decades, or even centuries, depending on how you look at it.

The first theoretical method for Materials Characterisation that I used during my PhD was Molecular Dynamics (MD). MD describes atomic interactions using Newton’s laws of motion (hence ‘centuries’).

Each atom (or other component) in a system is associated with a mathematical model describing the force it exerts on other objects in its vicinity. When many such atoms are placed into a system, the individual models are combined to form a ‘force field’.

The combined system can then be simulated to calculate the material’s properties. For a small number of atoms (typically single digits to tens of atoms), a desktop computer may suffice.
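
To make the force-field idea concrete, here is a minimal sketch of a classical MD loop in plain Python. It assumes a Lennard-Jones pair potential in reduced units and the velocity Verlet integrator, both common textbook choices used here purely for illustration rather than the specific potentials or codes from my own work; the constants EPSILON, SIGMA and MASS are hypothetical. Each pair of atoms contributes a force, the contributions are summed into a net force on every atom, and Newton’s second law advances positions and velocities.

```python
# Hypothetical parameters in reduced (dimensionless) Lennard-Jones units.
EPSILON = 1.0  # depth of the potential well
SIGMA = 1.0    # separation at which the pair potential is zero
MASS = 1.0     # mass of every atom


def lj_energy(r2):
    """Lennard-Jones pair energy 4*eps*((sigma/r)^12 - (sigma/r)^6), given r^2."""
    sr6 = (SIGMA * SIGMA / r2) ** 3
    return 4.0 * EPSILON * (sr6 * sr6 - sr6)


def lj_force(r_vec):
    """Force on atom i due to atom j, where r_vec = r_i - r_j (-dU/dr along r_vec)."""
    r2 = sum(c * c for c in r_vec)
    sr6 = (SIGMA * SIGMA / r2) ** 3
    scale = 24.0 * EPSILON * (2.0 * sr6 * sr6 - sr6) / r2
    return [scale * c for c in r_vec]


def total_forces(positions):
    """Sum the pairwise contributions into a net force on every atom."""
    n = len(positions)
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            r_vec = [positions[i][k] - positions[j][k] for k in range(3)]
            f = lj_force(r_vec)
            for k in range(3):
                forces[i][k] += f[k]
                forces[j][k] -= f[k]  # Newton's third law: equal and opposite
    return forces


def total_energy(positions, velocities):
    """Kinetic plus potential energy, used to check the integration is stable."""
    kinetic = sum(0.5 * MASS * sum(v * v for v in vel) for vel in velocities)
    potential = 0.0
    n = len(positions)
    for i in range(n):
        for j in range(i + 1, n):
            r2 = sum((positions[i][k] - positions[j][k]) ** 2 for k in range(3))
            potential += lj_energy(r2)
    return kinetic + potential


def velocity_verlet(positions, velocities, dt, steps):
    """Advance the system in time using F = m a (updates the lists in place)."""
    forces = total_forces(positions)
    for _ in range(steps):
        for i in range(len(positions)):
            for k in range(3):
                velocities[i][k] += 0.5 * dt * forces[i][k] / MASS  # half kick
                positions[i][k] += dt * velocities[i][k]            # drift
        forces = total_forces(positions)
        for i in range(len(positions)):
            for k in range(3):
                velocities[i][k] += 0.5 * dt * forces[i][k] / MASS  # half kick
    return positions, velocities


# Usage: three atoms released from rest; they attract and start to oscillate.
pos = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [0.0, 1.5, 0.0]]
vel = [[0.0, 0.0, 0.0] for _ in pos]
e_start = total_energy(pos, vel)
pos, vel = velocity_verlet(pos, vel, dt=0.001, steps=1000)
print("energy drift:", abs(total_energy(pos, vel) - e_start))
```

The all-pairs loop in `total_forces` is the simplest possible choice; production MD codes add periodic boundaries, interaction cutoffs and neighbour lists, and distribute the atoms across many processors.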

However, real materials involve enormous numbers of atoms, and useful calculations usually require hundreds of atoms or more to be simulated. This, of course, necessitates HPC.
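
As a rough, back-of-the-envelope illustration of why atom counts matter: in a naive force calculation every atom interacts with every other, so the number of distinct pairs grows as N(N − 1)/2. The sketch below (the function name `pair_count` is purely illustrative) prints that count for a few system sizes. Real MD codes use cutoffs and neighbour lists to skip most distant pairs, so treat this as an upper bound rather than a cost model; on the other hand, the work is repeated every timestep, and simulations often run for millions of steps.

```python
def pair_count(n_atoms):
    """Distinct atom pairs a naive all-pairs force loop evaluates per timestep."""
    return n_atoms * (n_atoms - 1) // 2


for n in (10, 100, 1_000, 100_000):
    # Each tenfold increase in atoms means roughly a hundredfold increase in pairs.
    print(f"{n:>7} atoms -> {pair_count(n):>13,} pairs")
```

For example, 1,000 atoms already give 499,500 pairs to evaluate on every single step.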

Although this force field approach suffices for many use cases, accurate treatment of atomic-scale interactions requires the application of the laws of quantum mechanics rather than Newton’s laws of motion.

Various methods that tackle this have been developed and are often referred to as ‘first principles’ or ‘ab-initio’ calculations. Of these methods, ‘density functional theory’ (DFT) is the most commonly used in Materials Characterisation.

This method was central to the computational aspect of my PhD work, and because DFT is far more computationally demanding than force-field methods, HPC is crucial.

Simulating Experiments

Besides the more theoretical approach to Materials Characterisation, simulating experiments is also a common use for HPC.

This involves simulating the action of experimental apparatus on a particular material sample. As well as being cheaper and possibly faster than running the actual experiment, these simulations produce images that play an important role in interpreting real experimental images.

This is especially the case with TEM, where three-dimensional information is superimposed on the resulting two-dimensional image. Such images are easily misinterpreted by the untrained eye.
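
The loss of depth information can be illustrated with a deliberately crude sketch: treat the sample as a small 3D occupancy grid (1 for an atom, 0 for empty space) and form a ‘projected’ image by summing along the beam direction. This ignores all of the actual electron physics; real TEM image simulations (the multislice method is a widely used example) model how the electron wave propagates and scatters through the sample. The function name and the two hypothetical structures below are illustrative only: they place the same atoms at different depths, yet their projections are identical.

```python
def project_along_beam(volume):
    """Sum a grid indexed volume[z][y][x] along z, the assumed beam direction."""
    ny, nx = len(volume[0]), len(volume[0][0])
    image = [[0 for _ in range(nx)] for _ in range(ny)]
    for layer in volume:          # walk through the sample slice by slice
        for y in range(ny):
            for x in range(nx):
                image[y][x] += layer[y][x]
    return image


# Hypothetical 2 x 2 x 2 structures containing the same two atoms.
same_layer = [
    [[1, 0], [0, 1]],  # z = 0: both atoms in the top layer
    [[0, 0], [0, 0]],  # z = 1: empty
]
different_layers = [
    [[1, 0], [0, 0]],  # z = 0: one atom at the top
    [[0, 0], [0, 1]],  # z = 1: the other atom deeper down
]

print(project_along_beam(same_layer))
print(project_along_beam(different_layers))
```

Both structures yield the same 2D image, which is exactly why an untrained eye can misread depth from a TEM image.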

The increased understanding provided by experimental simulations may even inform how a lab experiment is performed.

Summary

To summarise, lab experiments and HPC are mutually beneficial. Lab experiments, of course, allow us to observe the real world, but they can be expensive and often lead to more questions than answers. Supporting HPC simulations can drive down costs and help us better understand the results from lab experiments.

In most cases, lab experiments cannot be performed continuously due to competition for resources and equipment failure. In these situations, computational calculations can supplement experimental work and help further our understanding.

Manveer Munde

Principal Consultant

Red Oak Consulting